This Bloomberg Law page tracks current legal opinions and guidance offered by the State Bar Associations across the United States.

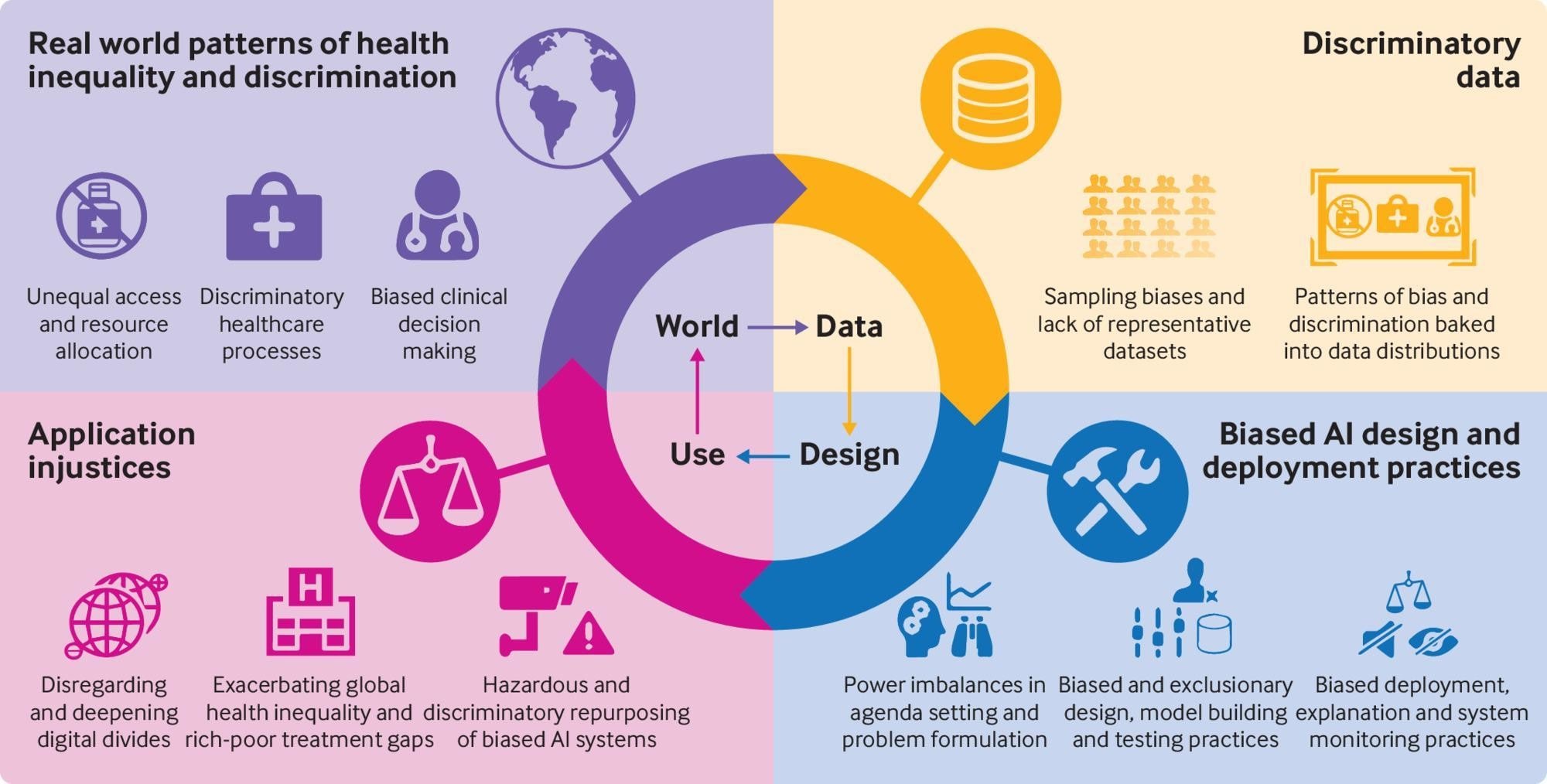

How can an AI be biased? It depends on how the AI learns. An AI learns patterns from its training dataset, so if that dataset is skewed or unrepresentative, the AI may assess situations unfairly or apportion resources unfairly.
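To make the idea concrete, here is a toy sketch in Python of how a skewed dataset can carry over into a model's decisions. Everything in it is hypothetical: the group labels "A" and "B", the approval counts, and the helper names `train_approval_rates` and `predict` are invented for illustration and do not describe any real AI system.

```python
from collections import defaultdict


def train_approval_rates(records):
    """Learn, for each group, the share of past cases that were approved."""
    counts = defaultdict(lambda: [0, 0])  # group -> [approved, total]
    for group, approved in records:
        counts[group][0] += int(approved)
        counts[group][1] += 1
    return {group: approved / total for group, (approved, total) in counts.items()}


def predict(rates, group, threshold=0.5):
    """Approve a new case only if the group's learned rate meets the threshold."""
    return rates.get(group, 0.0) >= threshold


# Hypothetical historical decisions: group A was approved far more often than group B.
history = (
    [("A", True)] * 80 + [("A", False)] * 20
    + [("B", True)] * 30 + [("B", False)] * 70
)

rates = train_approval_rates(history)
decision_a = predict(rates, "A")  # the model repeats the historical pattern for A
decision_b = predict(rates, "B")  # and for B, regardless of individual merit
print(rates, decision_a, decision_b)
```

In this sketch the "model" has no information about individual applicants; it simply reproduces the pattern in its training data, so any unfairness in past decisions is carried forward into new ones.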

The creation and maintenance of AI have far-reaching consequences for the environment. Increased electricity use strains the grid, and data centers create further demand for power. A generative AI training cluster can use up to seven or eight times more energy than a typical computing workload, according to Noman Bashir, a Computing and Climate Impact Fellow at the MIT Climate and Sustainability Consortium (MCSC) and a postdoc in the Computer Science and Artificial Intelligence Laboratory (CSAIL) (see Zewe article). With growing demand for data centers and AI development, energy demand is likely to keep increasing in the coming years.

Deepfakes are images, videos, or audio generated by an AI using deep learning. They can be harnessed for malicious purposes and have been used by scammers and other bad actors. This is why the University of Florida does not allow students to upload personal photos to Copilot.

AI poses privacy issues that are both familiar and novel. Just as the internet put privacy at risk by encouraging people to upload personal data to public spaces, AI relies on large datasets of personal information and behavior to train itself and generate content. Deepfakes and other forms of generative AI make it easier for scammers to target victims. Further, AI systems often use data without the consent of the people who generated it.

Source: Leslie, D., Mazumder, A., Peppin, A., Wolters, M. K., & Hagerty, A. (2021). Does “AI” stand for augmenting inequality in the era of covid-19 healthcare? BMJ, 372.
